MobileNetV2: Inverted Residuals and Linear Bottlenecks

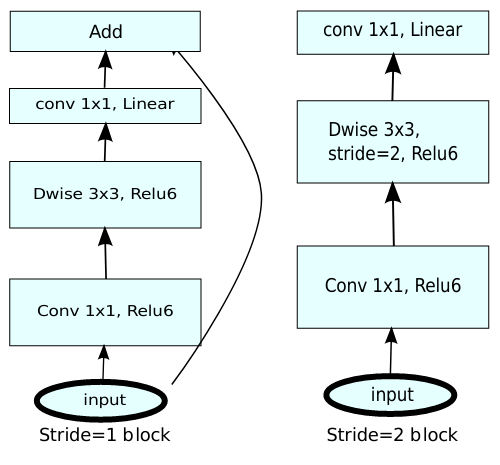

In this paper we describe a new mobile architecture, MobileNetV2, that improves the state of the art performance of mobile models on multiple tasks and benchmarks as well as across a spectrum of different model sizes. We also describe efficient ways of applying these mobile models to object detection in a novel framework we call SSDLite. Additionally, we demonstrate how to build mobile semantic segmentation models through a reduced form of DeepLabv3 which we call Mobile DeepLabv3. The MobileNetV2 architecture is based on an inverted residual structure where the input and output of the residual block are thin bottleneck layers opposite to traditional residual models which use expanded representations in the input an MobileNetV2 uses lightweight depthwise convolutions to filter features in the intermediate expansion layer. Additionally, we find that it is important to remove non-linearities in the narrow layers in order to maintain representational power. We demonstrate that this improves performance and provide an intuition that led to this design. Finally, our approach allows decoupling of the input/output domains from the expressiveness of the transformation, which provides a convenient framework for further analysis. We measure our performance on Imagenet classification, COCO object detection, VOC image segmentation. We evaluate the trade-offs between accuracy, and number of operations measured by multiply-adds (MAdd), as well as the number of parameters.

@inproceedings{sandler2018mobilenetv2,

title={Mobilenetv2: Inverted residuals and linear bottlenecks},

author={Sandler, Mark and Howard, Andrew and Zhu, Menglong and Zhmoginov, Andrey and Chen, Liang-Chieh},

booktitle={Proceedings of the IEEE conference on computer vision and pattern recognition},

pages={4510--4520},

year={2018}

}| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | mIoU | mIoU(ms+flip) | config | download |

|---|---|---|---|---|---|---|---|---|---|

| FCN | M-V2-D8 | 512x1024 | 80000 | 3.4 | 14.2 | 70.16 | 72.1 | config | model | log |

| PSPNet | M-V2-D8 | 512x1024 | 80000 | 3.6 | 11.2 | 70.23 | - | config | model | log |

| DeepLabV3 | M-V2-D8 | 512x1024 | 80000 | 3.9 | 8.4 | 73.84 | - | config | model | log |

| DeepLabV3+ | M-V2-D8 | 512x1024 | 80000 | 5.1 | 8.4 | 75.20 | - | config | model | log |

| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | mIoU | mIoU(ms+flip) | config | download |

|---|---|---|---|---|---|---|---|---|---|

| FCN | M-V2-D8 | 512x512 | 160000 | 6.5 | 64.4 | 19.71 | - | config | model | log |

| PSPNet | M-V2-D8 | 512x512 | 160000 | 6.5 | 57.7 | 29.68 | - | config | model | log |

| DeepLabV3 | M-V2-D8 | 512x512 | 160000 | 6.8 | 39.9 | 34.08 | - | config | model | log |

| DeepLabV3+ | M-V2-D8 | 512x512 | 160000 | 8.2 | 43.1 | 34.02 | - | config | model | log |