Segmentation - identifying which image pixels belong to an object - is a core task in computer vision and is used in a broad array of applications, from analyzing scientific imagery to editing photos. But creating an accurate segmentation model for specific tasks typically requires highly specialized work by technical experts with access to AI training infrastructure and large volumes of carefully annotated in-domain data. Reducing the need for task-specific modeling expertise, training compute, and custom data annotation for image segmentation is the main goal of the Segment Anything project.

The Segment Anything Model (SAM) predicts object masks given prompts that indicate the desired object. SAM has learned a general notion of what objects are, and it can generate masks for any object in any image or any video, even including objects and image types that it had not encountered during training. SAM is general enough to cover a broad set of use cases and can be used out of the box on new image “domains” (e.g. underwater photos, MRI or cell microscopy) without requiring additional training (a capability often referred to as zero-shot transfer).

Previously, segmentation problems were solved with one of two classes of approaches. The first, interactive segmentation, could segment any class of object but required a person to guide the method by iteratively refining a mask. The second, automatic segmentation, could segment specific object categories defined ahead of time (e.g., cats or chairs) but required substantial amounts of manually annotated objects to train (e.g., thousands or even tens of thousands of examples of segmented cats), along with the compute resources and technical expertise to train the segmentation model. Neither approach provided a general, fully automatic solution to segmentation.

The Segment Anything Model generalizes these two classes of approaches. It is a single model that can perform both interactive segmentation and automatic segmentation. This notebook shows how to convert the Segment Anything Model to OpenVINO format and use it, allowing it to run on the variety of platforms that OpenVINO supports.

The notebook demonstrates how to work with the model in two modes:

-

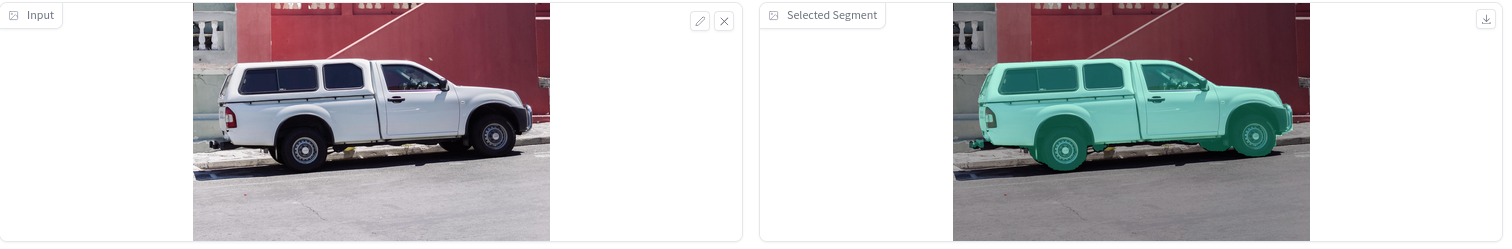

Interactive segmentation mode: in this demonstration you can upload image and specify point related to object using Gradio interface and as the result you get segmentation mask for specified point. The following image shows an example of the input text and the corresponding predicted image.

-

Automatic segmentation mode: masks for the entire image can be generated by sampling a large number of prompts over an image.
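Both modes come down to point prompts: interactive mode takes a single user click, while automatic mode samples many points over the image. The sketch below illustrates the two ideas in plain Python. The helper names `scale_point` and `build_point_grid` are hypothetical and are not taken from the notebook. The 1024-pixel longest side is an assumption based on the reference SAM preprocessing, and the regular grid is one common way to sample prompts, not necessarily the only one.

```python
def scale_point(x, y, orig_w, orig_h, target_long_side=1024):
    """Map a click given in original-image pixels to the resized input frame.

    Assumes the image is resized so that its longest side equals
    target_long_side while keeping the aspect ratio.
    """
    scale = target_long_side / max(orig_w, orig_h)
    new_w = int(orig_w * scale + 0.5)  # round to the nearest pixel
    new_h = int(orig_h * scale + 0.5)
    return x * new_w / orig_w, y * new_h / orig_h


def build_point_grid(n_per_side):
    """Return n_per_side**2 evenly spaced (x, y) points in the unit square.

    Points sit at cell centers, so none of them lies on the image border.
    Multiply by the image width and height to get pixel coordinates.
    """
    offset = 1.0 / (2 * n_per_side)
    step = 1.0 / n_per_side
    axis = [offset + i * step for i in range(n_per_side)]
    return [(x, y) for y in axis for x in axis]


# Interactive mode: one click on an 800x600 image.
click = scale_point(400, 300, orig_w=800, orig_h=600)

# Automatic mode: a 32 x 32 grid gives 1024 point prompts.
grid = build_point_grid(32)
```

Each grid point would then be passed to the model as a separate foreground prompt, and the resulting masks would be filtered and deduplicated afterwards.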

The notebook contains the following steps:

- Convert the PyTorch models to OpenVINO format.

- Run the OpenVINO model in interactive segmentation mode.

- Run the OpenVINO model in automatic mask generation mode.

- Run the NNCF post-training optimization pipeline to compress the SAM encoder.
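As a rough sketch of the conversion and compression steps, the code below assumes that the `torch`, `openvino`, and `nncf` packages are installed and that `torch_encoder` is SAM's PyTorch image encoder, already loaded from a checkpoint. The helper names `convert_encoder` and `quantize_encoder` are hypothetical and do not come from the notebook. The 1x3x1024x1024 example input follows the reference SAM encoder and is an assumption for other variants.

```python
from pathlib import Path


def convert_encoder(torch_encoder, xml_path: Path):
    """Convert a PyTorch image encoder to an OpenVINO model and save it as IR."""
    # Imported lazily so the module can be loaded without the heavy dependencies.
    import openvino as ov
    import torch

    # Assumption: the encoder expects a single 1024x1024 RGB image.
    example_input = torch.zeros(1, 3, 1024, 1024)
    ov_model = ov.convert_model(torch_encoder, example_input=example_input)
    ov.save_model(ov_model, xml_path)
    return ov_model


def quantize_encoder(ov_model, calibration_inputs):
    """Apply NNCF post-training quantization to a converted encoder.

    calibration_inputs is an iterable of already preprocessed encoder inputs.
    """
    import nncf

    calibration_dataset = nncf.Dataset(calibration_inputs)
    # The SAM image encoder is a vision transformer, hence the model type hint.
    return nncf.quantize(
        ov_model,
        calibration_dataset,
        model_type=nncf.ModelType.TRANSFORMER,
    )
```

The quantized model can be saved with `ov.save_model` in the same way as the original one and then compiled for a target device with `ov.Core().compile_model`.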

This is a self-contained example that relies solely on its own code.

We recommend running the notebook in a virtual environment. You only need a Jupyter server to start.

For details, please refer to the Installation Guide.