
Why is 'del' called explicitly? #5842

Comments

|

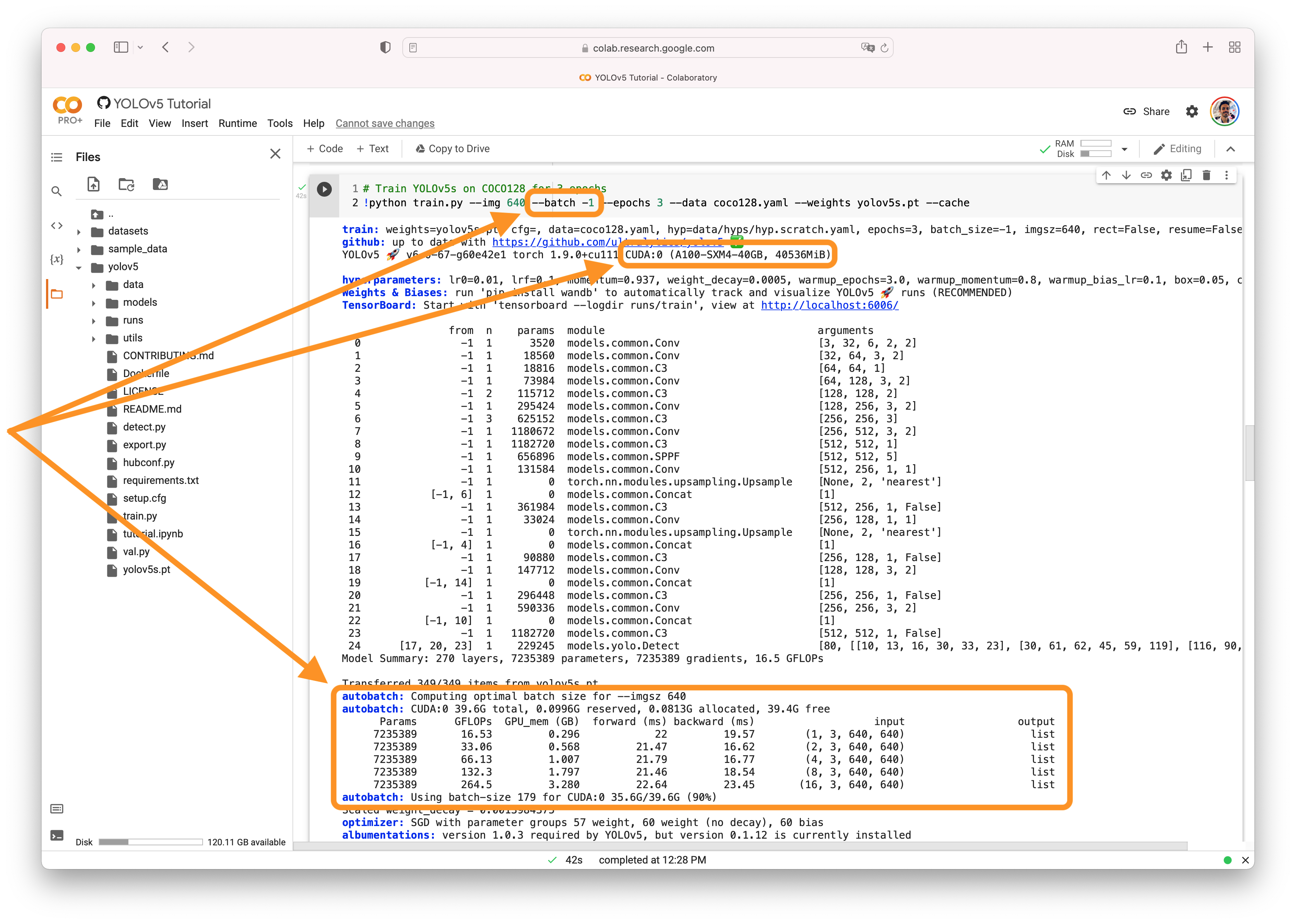

@developer0hye Deleting a variable frees memory.

AutoBatch: You can use YOLOv5 AutoBatch (NEW) to find the best batch size for your training by passing …

Good luck, and let us know if you have any other questions!
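A minimal sketch of what `del` does in CPython: it removes a name binding, and the object behind it is freed only once no other reference to it remains. `Checkpoint` is a hypothetical stand-in for a large object such as a loaded checkpoint. The `weakref` probe is used only to observe when the object is actually gone.

```python
from __future__ import annotations

import gc
import weakref


class Checkpoint:
    """Hypothetical stand-in for a large object such as a loaded checkpoint."""

    def __init__(self, size: int) -> None:
        self.payload = bytearray(size)


def del_frees_only_last_reference() -> tuple[bool, bool]:
    ckpt = Checkpoint(1_000_000)
    alias = ckpt                  # a second strong reference to the same object
    probe = weakref.ref(ckpt)     # a weak reference does not keep the object alive

    del ckpt                      # removes the name only; `alias` still holds the object
    alive_after_first_del = probe() is not None

    del alias                     # the last strong reference is gone
    gc.collect()                  # not required in CPython (refcounting), harmless elsewhere
    alive_after_second_del = probe() is not None
    return alive_after_first_del, alive_after_second_del


if __name__ == "__main__":
    print(del_frees_only_last_reference())  # (True, False)
```

If anything else (a model attribute, an optimizer, a container) still refers to the same data, `del` on one name frees nothing. That is one possible reason an explicit `del` shows no measurable effect.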


Thanks for your reply! AutoBatch looks good. However, when I ran the code from this issue, I could not fit a larger batch size with `del` than without it. The results suggest that calling `del` does not reduce memory usage, so I am still confused.


@developer0hye They're not related. Variables are typically stored in RAM, while batch size is correlated with CUDA (GPU) memory.
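To illustrate the distinction, here is a small sketch that assumes the deleted object lives in host RAM, as a checkpoint loaded onto the CPU would. Python's `tracemalloc` shows the traced heap shrinking after `del`, while nothing here touches GPU memory. CUDA usage in PyTorch would have to be checked separately, for example with `torch.cuda.memory_allocated()`, which is not used below.

```python
import tracemalloc


def host_memory_freed_by_del(nbytes: int = 50_000_000) -> tuple:
    """Return (bytes traced while the buffer exists, bytes traced after `del`)."""
    tracemalloc.start()
    try:
        buffer = bytearray(nbytes)  # allocated in host RAM, like a CPU-side checkpoint
        with_buffer, _ = tracemalloc.get_traced_memory()
        del buffer                  # drops the only reference; CPython frees it immediately
        without_buffer, _ = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return with_buffer, without_buffer


if __name__ == "__main__":
    during, after = host_memory_freed_by_del()
    print(f"traced while alive: {during / 1e6:.1f} MB, after del: {after / 1e6:.1f} MB")
```

Freeing host RAM this way does not change how many samples fit on the GPU, which is consistent with the unchanged maximum batch size reported above.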


@glenn-jocher

yolov5/train.py, line 199 in bc48457


Hi Jocher!

Why is `del` called explicitly?

When I ran my test code with and without `del ckpt`, it printed the same maximum batch size. Does `del` actually save memory?

If so, does that let us use a larger batch size?

Weight Link: https://github.com/Megvii-BaseDetection/YOLOX/releases/download/0.1.1rc0/yolox_x.pth
