Neural formant synthesis using differentiable resonant filters and source-filter model structure.

Authors: Lauri Juvela, Pablo Pérez Zarazaga, Gustav Eje Henter, Zofia Malisz

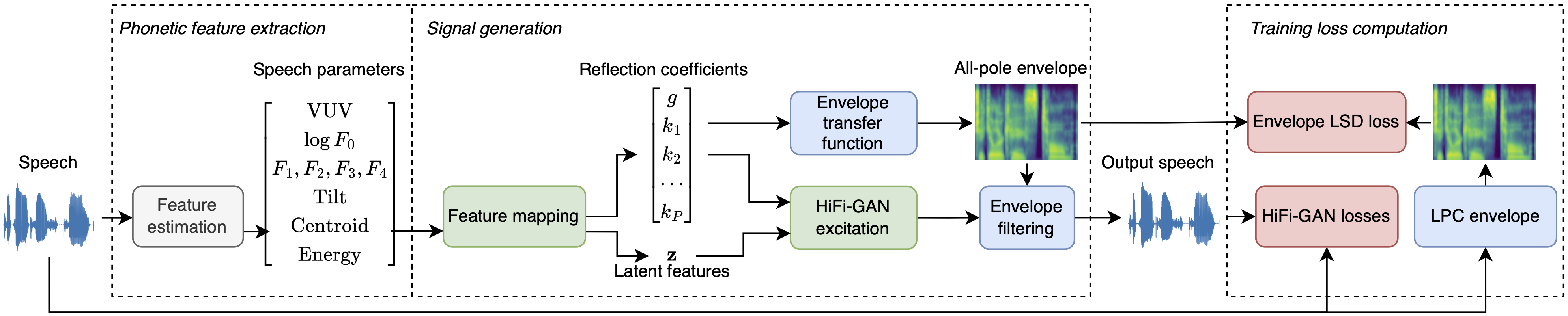

We present a model that performs neural speech synthesis using the structure of the source-filter model, which allows the spectral envelope and the glottal excitation to be inspected and manipulated independently.

A description of the presented model, along with sound samples compared against other synthesis and manipulation systems, can be found on the project's demo webpage.
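
For readers new to the source-filter idea mentioned above, the following is a minimal toy sketch, not the model from this repository: an impulse train stands in for the glottal excitation, and a cascade of two-pole resonators stands in for the formant filter. The function names, the formant frequencies and bandwidths, and the 16 kHz sample rate are all illustrative assumptions, and the filters here are plain Python rather than differentiable.

```python
import math


def impulse_train(f0_hz, duration_s, sample_rate):
    """Crude excitation source: one unit impulse per pitch period."""
    n_samples = round(duration_s * sample_rate)
    period = max(1, round(sample_rate / f0_hz))
    return [1.0 if i % period == 0 else 0.0 for i in range(n_samples)]


def resonator(signal, freq_hz, bandwidth_hz, sample_rate):
    """Two-pole resonant filter: y[n] = x[n] + a1*y[n-1] + a2*y[n-2]."""
    radius = math.exp(-math.pi * bandwidth_hz / sample_rate)  # pole radius < 1
    theta = 2.0 * math.pi * freq_hz / sample_rate             # pole angle
    a1 = 2.0 * radius * math.cos(theta)
    a2 = -radius * radius
    y1 = y2 = 0.0
    out = []
    for x in signal:
        y = x + a1 * y1 + a2 * y2
        out.append(y)
        y2, y1 = y1, y
    return out


def synthesize(f0_hz, formants, duration_s=0.05, sample_rate=16000):
    """Filter the source through one resonator per (frequency, bandwidth) pair."""
    signal = impulse_train(f0_hz, duration_s, sample_rate)
    for freq_hz, bandwidth_hz in formants:
        signal = resonator(signal, freq_hz, bandwidth_hz, sample_rate)
    return signal


# Illustrative values only: a 120 Hz source and three formant resonances.
vowel = synthesize(120.0, [(700.0, 80.0), (1200.0, 90.0), (2600.0, 120.0)])
```

Because the source and the filter are separate steps, changing `f0_hz` alters the pitch without touching the resonances, and changing the formant list alters the spectral envelope without touching the pitch.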

First, we need to create a conda environment to install our dependencies. Use mamba to speed up the process if possible.

mamba env create -n neuralformants -f environment.yml

conda activate neuralformants

Pre-trained models are available on HuggingFace and can be downloaded using git-lfs. If you don't have git-lfs installed (it's included in environment.yml), you can find it here. Use the following command to download the pre-trained models:

git submodule update --init --recursive

Install the package in development mode:

pip install -e .

GlotNet is partially included for its WaveNet models and DSP functions. The full repository is available here.

HiFi-GAN is included in the hifi_gan subdirectory. The original source code is available here.

We provide scripts that run inference on the end-to-end architecture: audio is provided as input, and a wav file with the manipulated features is stored as output.

Change the feature scaling to modify pitch (with F0) or formants. The scales are provided as a list of 5 elements in the following order:

[F0, F1, F2, F3, F4]

An example with the provided audio samples from the VCTK dataset can be run using:

HiFi-Glot

python inference_hifiglot.py \
    --input_path "./Samples" \
    --output_path "./output/hifi-glot" \
    --config "./checkpoints/HiFi-Glot/config_hifigan.json" \
    --fm_config "./checkpoints/HiFi-Glot/config_feature_map.json" \
    --checkpoint_path "./checkpoints/HiFi-Glot" \
    --feature_scale "[1.0, 1.0, 1.0, 1.0, 1.0]"

NFS

python inference_hifigan.py \
    --input_path "./Samples" \
    --output_path "./output/nfs" \
    --config "./checkpoints/NFS/config_hifigan.json" \
    --fm_config "./checkpoints/NFS/config_feature_map.json" \
    --checkpoint_path "./checkpoints/NFS" \
    --feature_scale "[1.0, 1.0, 1.0, 1.0, 1.0]"

NFS-E2E

python inference_hifigan.py \
    --input_path "./Samples" \
    --output_path "./output/nfs-e2e" \
    --config "./checkpoints/NFS-E2E/config_hifigan.json" \
    --fm_config "./checkpoints/NFS-E2E/config_feature_map.json" \
    --checkpoint_path "./checkpoints/NFS-E2E" \
    --feature_scale "[1.0, 1.0, 1.0, 1.0, 1.0]"

The HiFi-GAN and HiFi-Glot models can be trained with the end-to-end architecture using the scripts train_e2e_hifigan.py and train_e2e_hifiglot.py.
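
As a rough illustration of what the `--feature_scale` list in the inference commands above expresses, the sketch below parses such a string and applies it to one frame of features. It assumes the scales act as plain multipliers on values in Hz; the inference scripts may apply them differently (for example in a normalized or logarithmic domain). The helper names `parse_feature_scale` and `scale_frame` and the frame values are hypothetical and not part of this repository.

```python
import json

# Order documented for the --feature_scale list.
FEATURE_ORDER = ["F0", "F1", "F2", "F3", "F4"]


def parse_feature_scale(text):
    """Parse a string like "[1.0, 1.0, 1.0, 1.0, 1.0]" into a name -> scale dict."""
    scales = json.loads(text)
    if len(scales) != len(FEATURE_ORDER):
        raise ValueError(f"expected {len(FEATURE_ORDER)} scales, got {len(scales)}")
    return dict(zip(FEATURE_ORDER, (float(s) for s in scales)))


def scale_frame(frame, scales):
    """Multiply each feature of one analysis frame by its scale (assumed behaviour)."""
    return {name: frame[name] * scales[name] for name in FEATURE_ORDER}


# Hypothetical example: raise pitch by 20% and lower F2 by 10%.
scales = parse_feature_scale("[1.2, 1.0, 0.9, 1.0, 1.0]")
frame = {"F0": 100.0, "F1": 500.0, "F2": 1500.0, "F3": 2500.0, "F4": 3500.0}
scaled = scale_frame(frame, scales)
```

Under this assumption, a scale of 1.0 leaves a feature unchanged, so the all-ones list used in the commands above would correspond to resynthesis without manipulation.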

Citation information will be added when a pre-print is available.