-

-

Notifications

You must be signed in to change notification settings - Fork 39

Dataset Augmentation

Data augmentation is an explicit form of regularization that is widely used in the training of deep CNN like ESRGANs. It's purpose is to artificially enlarge the training dataset from existing data using various translations and transformations, such as, rotation, flipping, cropping, adding noises, blur, and others. In this sense, the idea is to expose the model to more information than what is available, so it is able to explore the input and parameter space.

The two most popular and effective data augmentation methods in training of deep CNN are random flipping (horizontal or vertical mirror) and random cropping. Random flipping randomly flips the input image horizontally (vertical flips may result in networks being unable to behave correctly, like becoming unable to differentiate a 6 from a 9 or differentiating from up and down), while random cropping extracts random sub-patch from the input image.

While some options are typically used and have been proven to be useful, augmentation is typically performed by trial and error, and the types of augmentation performed are limited to the imagination, time, and experience of the user and according to the desired task for which the model is being designed. Often, the choice of augmentation strategy can be more important than the type of network architecture used.

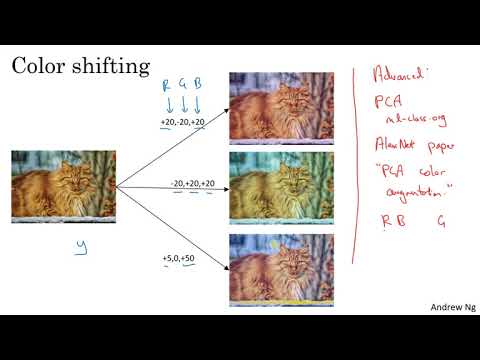

Some more information about Data Augmentation can be found in Andrew Ng's excellent course on deep learning:

In this repository, a much larger set of options for dataset augmentation has been added, among which there are: Noise ("lr_noise", "lr_noise_types"):

- gaussian (will randomly add gaussian noise with a sigma from 4 to 220, which is heavy, can be adjusted in the augmentations.py file)

- JPEG (will randomly compress the image to between 10% and 50% quality, can be adjusted in the augmentations.py file)

- quantize (using MiniSOM for color quantization)

- poisson

- dither (will randomly choose between Floyd-Steinberg dithering and Bayer Dithering)

- s&p (salt & pepper)

- speckle

- clean (passes the clean image, it is recommended from my tests to have this among the options so it doesn't overfit to always removing noise, even if the image was clean to begin with)

"lr_noise", "lr_noise_types2" is a secondary noise type, in case two noises need to be combined in sequence (ie. gaussian + JPEG, quantize + dither, etc)

Blur ("lr_blur", "lr_blur_types"), uses standard OpenCV algorithms:

- average

- box

- gaussian

- bilateral

- clean (same as with the noise)

"lr_noise_types", "lr_noise_types2", "hr_noise_types" are variables where one of the options in the list will be chosen at random, so if you put one of the types more than once, it will have a higher probability of being chosen

"lr_downscale", "lr_downscale_types" are for on the fly LR generation, scaling the original HR by the scale selected for training the model

- By default, randomly uses one of the OpenCV interpolation algorithms to scale, from cv2.INTER_NEAREST, cv2.INTER_LINEAR, cv2.INTER_CUBIC, cv2.INTER_AREA, cv2.INTER_LANCZOS4 and cv2.INTER_LINEAR_EXACT, but can be changed in the JSON to use only the desired ones

"hr_crop" will randomly scale an HR image by a factor from 1x to 2x and crop it to the size set in "HR_size" and then, generate the LR image. It could potentially be used to skip cropping the HR images a priori altogether, it will take any image size and randomly crop it to the correct size.

"hr_noise", "rand_flip_LR_HR", "lr_cutout" and "lr_erasing" are experimental:

- "rand_flip_LR_HR" will flip the LR and HR images with a random chance set in "flip_chance". In theory, randomly flipping labels helps in model convergence and I wanted to test if it worked like this

- Cutout

- Random erasing

As a general recommendation, it is probably not the best choice to enable every possible augmentation at the same time, since some options may be pulling the network to opposite directions (ie, denoising will introduce blur that reduces high-frequency information, while blur will introduce sharpness/high frequency/noise that's in the opposite direction), so the result will not be the best at anything, but only average. If there's interest to know how a model will all options enabled performs, this model has been trained for 50k iterations in such manner.

The data augmentation can easily be applied on the CPU along with any other steps during data loading (Dataloader). By implementing this operation on the CPU in parallel with the main GPU training task, the computation can be hidden. For this reason, everything is being done in the Dataloader stage, so it depends on the "n_workers" to do the transformations in CPU, so it doesn't affect training times.