Add this suggestion to a batch that can be applied as a single commit.

This suggestion is invalid because no changes were made to the code.

Suggestions cannot be applied while the pull request is closed.

Suggestions cannot be applied while viewing a subset of changes.

Only one suggestion per line can be applied in a batch.

Add this suggestion to a batch that can be applied as a single commit.

Applying suggestions on deleted lines is not supported.

You must change the existing code in this line in order to create a valid suggestion.

Outdated suggestions cannot be applied.

This suggestion has been applied or marked resolved.

Suggestions cannot be applied from pending reviews.

Suggestions cannot be applied on multi-line comments.

Suggestions cannot be applied while the pull request is queued to merge.

Suggestion cannot be applied right now. Please check back later.

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[Model][VLM] Add Qwen2-VL model support #7905

[Model][VLM] Add Qwen2-VL model support #7905

Changes from 25 commits

0a648b2320df577f96df8fbf2b8bbcaff4ff2185bf60448cb71a77b160c4cbde29ff54ddb713814fe12a89def23e721e60d66d167acd85ed8d762c687ba5edcda300ada03a3f25fb189d01530dfaebfe4e492e532e87db739a1069855c78b091983fb40657177395885bab9ba4587346ffad79f9e7a946d5274176f3116c386f302c64c2176bdefd633dd048369ce7d282c66a14ef94d09b7a4fFile filter

Filter by extension

Conversations

Jump to

There are no files selected for viewing

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I found this part a bit difficult to understand. Could you please write a comment explaining it (or provide a pointer to the relevant paper if any)? Especially, I found

m[i]particularly confusing.There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

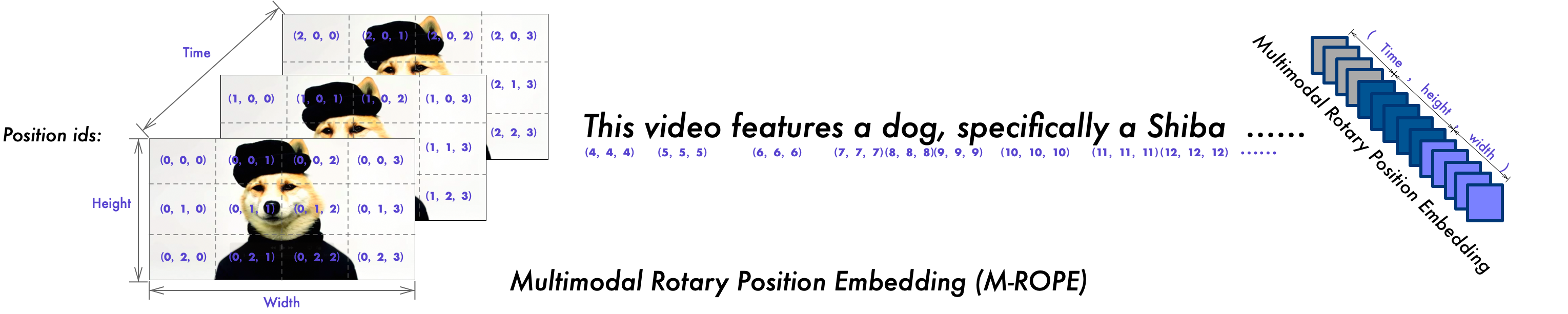

We design a Multimodal Rotary Position Embedding (M-ROPE). By deconstructing the original rotary embedding into three parts representing temporal and spatial (height and width) information,M-ROPE enables LLM to concurrently capture and integrate 1D textual, 2D visual, and 3D video positional information.

mrope_sectionrepresents the number of dimensions occupied by each modality (temporal and spatial: height and width) in the embedding (emb).iindicates which modality (dimension) it refers to. We will extract the corresponding dimensions of the embedding based on the 3Drope_indexand then concatenate them.For a 64-channel

rope_embwheremrope_sectionis defined as (time 16, height 24, width 24), therope_index(1, 2, 3) corresponds to the concatenation ofrope_emb[1][:16],rope_emb[2][16:16+24], andrope_emb[3][16+24:16+24+24].