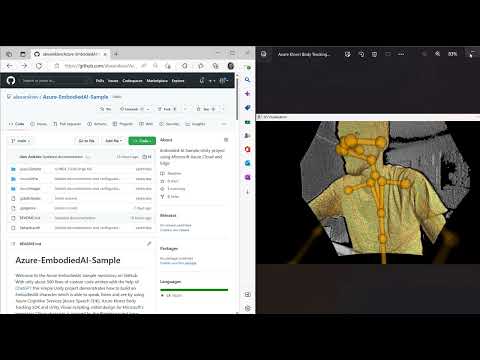

Welcome to the Azure EmbodiedAI Sample repository on GitHub. With only about 500 lines of custom code, written with the help of ChatGPT, this simple Unity project demonstrates how to build an EmbodiedAI character that can speak, listen and see by using Azure Cognitive Services (Azure Speech SDK), Azure Kinect Body Tracking SDK and Unity Visual Scripting. The initial design for Microsoft's legendary Clippy character is inspired by the Blender project here.

Make everything as simple as possible, but not simpler.

This Unity sample is intended for the Windows Desktop platform. Azure Kinect DK and Azure Kinect Body Tracking SDK are used for Body Tracking (seeing). An NVIDIA GeForce RTX graphics card with CUDA processing mode is recommended for better performance, although CPU processing mode may also be used. Azure Speech SDK is used for speaking and listening, and it is assumed that your PC has a microphone and speakers.

This sample is built based on the following foundational GitHub templates:

- Sample Unity Body Tracking Application

- Synthesize speech in Unity using the Speech SDK for Unity

- Recognize speech from a microphone in Unity using the Speech SDK for Unity

Solution architecture of this template is described in detail in the following Medium articles:

Solution demo video can be found here and Code walkthrough is available here.

The table below lists the software versions this sample was developed and tested on, as compatibility guidance.

| Prerequisite | Download Link | Version |
|---|---|---|
| Unity | https://unity.com/download | 2021.3.16f1 (LTS) |
| Azure Speech SDK for Unity | https://aka.ms/csspeech/unitypackage | 1.24.2 |
| Azure Kinect Body Tracking SDK | https://www.microsoft.com/en-us/download/details.aspx?id=104221 | 1.1.2 |
| NVIDIA CUDA Toolkit | https://developer.nvidia.com/cuda-downloads | 11.7 |
| NVIDIA cuDNN for CUDA | https://developer.nvidia.com/rdp/cudnn-archive | 8.5.0 |

Please note that you will need to install Unity, Azure Kinect Body Tracking SDK, NVIDIA CUDA Toolkit and NVIDIA cuDNN for CUDA on your PC. Azure Speech SDK for Unity and select DLLs from Azure Kinect Body Tracking SDK, NVIDIA CUDA Toolkit and NVIDIA cuDNN for CUDA are already packaged with the project for convenience of setup and configuration.

After you've cloned or downloaded the code please install the prerequisites. Please note that select large files (DLLs) are stored with Git LFS, so you will need to manually download the files listed here.

The default processing mode for Body Tracking is set to CUDA to optimize performance. To configure CUDA support, please refer to the guidance here and here. You may change the processing mode to CPU if necessary.

Because this sample relies on Azure Backend services deployed in the Cloud, please follow the Deployment guidance to deploy them. Once they are deployed, please copy this Auth file to the Desktop of your PC and populate it with the configuration details of the deployed Azure Backend services.

This sample also introduces a number of custom C# nodes for Unity Visual Scripting. After you open the project in Unity, please Generate Nodes as described here.

To run the sample, please open SampleScene and hit Play. You may also select the Clippy game object in the Hierarchy and open the SampleStateMachine graph to visually inspect the sample flow.

This sample features One-Click Deployment for the necessary Azure Backend services. If you need to sign up for Azure Subscription please follow this link.

| Capability | Docker Container | Protocol | Port |
|---|---|---|---|
| STT (Speech-to-Text) | mcr.microsoft.com/azure-cognitive-services/speechservices/speech-to-text | WS(S) | 5001 |
| TTS (Neural-Text-to-Speech) | mcr.microsoft.com/azure-cognitive-services/speechservices/neural-text-to-speech | WS(S) | 5002 |
| LUIS (Intent Recognition) | mcr.microsoft.com/azure-cognitive-services/language/luis | HTTP(S) | 5003 |
| ELASTIC-OSS Elasticsearch | docker.elastic.co/elasticsearch/elasticsearch-oss:7.10.2 | HTTP(S) | 9200 |
| ELASTIC-OSS Kibana | docker.elastic.co/kibana/kibana-oss:7.10.2 | HTTP(S) | 5601 |
| GPT-J | | HTTP(S) | 5004 |
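If you run these containers yourself, a quick reachability check can confirm that each service is listening before you start the Unity client. The following is a minimal sketch, not part of the sample: the port numbers come from the table above, while the `localhost` host name and the function names `is_port_open` and `check_backend` are assumptions made for illustration. It only tests that a TCP connection can be opened and says nothing about whether the service behind the port is healthy.

```python
import socket

# Ports copied from the table above. The host they run on is an assumption.
BACKEND_PORTS = {
    "STT": 5001,
    "TTS": 5002,
    "LUIS": 5003,
    "GPT-J": 5004,
    "Elasticsearch": 9200,
    "Kibana": 5601,
}


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unresolved host names.
        return False


def check_backend(host: str = "localhost") -> dict:
    """Map each service name to whether its port accepts TCP connections."""
    return {name: is_port_open(host, port) for name, port in BACKEND_PORTS.items()}


if __name__ == "__main__":
    for name, reachable in check_backend().items():
        print(f"{name:<14} {'reachable' if reachable else 'unreachable'}")
```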

This minimalistic template may be a great jump start for your own EmbodiedAI project and the possibilities from now on are truly endless.

This template already gets you started with the following capabilities:

- Speech Synthesis: Azure Speech SDK SpeechSynthesizer class

- Speech Recognition: Azure Speech SDK SpeechRecognizer class

- Computer Vision (Non-verbal interaction): Azure Kinect Body Tracking SDK

- Dialog Management: Unity Visual Scripting State Graph and Script Graphs

Please consider adding the following capabilities to your EmbodiedAI project as described in this Medium article:

- Natural Language Understanding: Language Understanding (LUIS), Conversational Language Understanding (CLU), etc.

- Natural Language Generation: Azure OpenAI Service

- More Computer Vision: Azure Kinect Sensor SDK

- Knowledge Base: Azure Cognitive Search (Semantic Search), Question Answering, etc.

- Any integrations with custom AI services via WebAPI: Azure Machine Learning, etc.

Note: If you are interested in leveraging Microsoft Power Virtual Agents (PVA) on Azure while building your EmbodiedAI project, please review this Medium article, which provides details about using the DialogServiceConnector class based on the Interact with a bot in C# Unity GitHub template.

Please also review important Game Development Concepts in the context of Azure Speech SDK explained in detail in this article.

- Character Rig and animations using Unity Mecanim

- Azure Backend services deployment options using Bicep and Terraform

- Backend services deployed on the Edge using Helm chart on Kubernetes (K8S) cluster

- Sample template for Unreal Engine (UE) using Blueprints

- Sample template for WebGL using Babylon.js

This template features a simple yet robust implementation of Lip Sync Visemes using Azure Speech SDK, as explained in this video. You can introduce visemes for your character using Maya Blend shapes, Blender shape keys, etc. In Unity you can then animate the visemes by manipulating Blend shape weights, by means of a Unity Blend tree, etc.
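The timing logic behind viseme-driven lip sync can be sketched independently of Unity. The Speech SDK reports each viseme as an ID together with an audio offset expressed in 100-nanosecond ticks. The sketch below is written in Python for brevity and is not the sample's implementation: the blend shape names (`viseme_00` and so on), the helper names and the simple on/off weighting are all assumptions for illustration. In Unity the resulting weights would typically be applied to the character's blend shapes each frame.

```python
from bisect import bisect_right

TICKS_PER_SECOND = 10_000_000  # audio offsets are reported in 100-nanosecond ticks
VISEME_COUNT = 22              # viseme IDs range from 0 (silence) to 21

# Hypothetical naming convention: one blend shape per viseme ID.
SHAPE_NAMES = {vid: f"viseme_{vid:02d}" for vid in range(VISEME_COUNT)}


def active_viseme(events, t_seconds):
    """Return the viseme ID active at t_seconds.

    events is a list of (audio_offset_ticks, viseme_id) pairs sorted by offset.
    Before the first event the mouth is treated as silent (ID 0).
    """
    offsets = [ticks / TICKS_PER_SECOND for ticks, _ in events]
    index = bisect_right(offsets, t_seconds) - 1
    return events[index][1] if index >= 0 else 0


def blend_weights(viseme_id):
    """Full weight (100) for the active shape and 0 for every other shape."""
    return {
        name: (100.0 if vid == viseme_id else 0.0)
        for vid, name in SHAPE_NAMES.items()
    }


if __name__ == "__main__":
    # Illustrative event list, not real SDK output.
    sample_events = [(500_000, 0), (1_000_000, 19), (2_500_000, 6)]
    current = active_viseme(sample_events, 0.12)
    weights = blend_weights(current)
    print(current, [name for name, w in weights.items() if w > 0])
```

Switching weights abruptly like this tends to look mechanical. Interpolating between the previous and the current shape over a few frames is a common refinement.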

Sample context:

Clippy is an endearing and helpful digital assistant, designed to make using Microsoft Office Suite of products more efficient and user-friendly. With his iconic paperclip shape and friendly personality, Clippy is always ready and willing to assist users with any task or question they may have. His ability to anticipate and address potential issues before they even arise has made him a beloved and iconic figure in the world of technology, widely recognized as an invaluable tool for productivity.

Experiment (1/23/2023): Azure OpenAI is deployed in the South Central US region and the Unity App client runs in the West US geo.

| Chat turn # | Sample Human request | Responsiveness test example 1 | Responsiveness test example 2 |
|---|---|---|---|
| 1 | What is Azure Cognitive Search? | Total tokens: 117, Azure OpenAI processing timing: 90.6115 ms, Azure OpenAI Studio browser request/response timing: 1.56 s | Total tokens: 117, Azure OpenAI processing timing: 96.2115 ms, Azure OpenAI Studio browser request/response timing: 1.40 s |
| 2 | What are the benefits of using Azure Cognitive Search? | Total tokens: 177, Azure OpenAI processing timing: 128.6817 ms, Azure OpenAI Studio browser request/response timing: 1.41 s | Total tokens: 167, Azure OpenAI processing timing: 73.1746 ms, Azure OpenAI Studio browser request/response timing: 957.91 ms |
| 3 | How can I index my data with Azure Cognitive Search? | Total tokens: 246, Azure OpenAI processing timing: 168.1983 ms, Azure OpenAI Studio browser request/response timing: 1.34 s | Total tokens: 229, Azure OpenAI processing timing: 229.8757 ms, Azure OpenAI Studio browser request/response timing: 597.07 ms |
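One way to read these measurements is to compare the processing time with the full browser round trip. The sketch below does that arithmetic on the six measurements from the table. It assumes that the browser request/response timing includes the Azure OpenAI processing time, which the experiment does not state explicitly, so the "overhead" figure is only an estimate of everything outside model processing (network, queuing, browser). The function names are illustrative.

```python
# (chat turn, test example, processing time in ms, browser round trip in ms),
# copied from the table above with seconds converted to milliseconds.
MEASUREMENTS = [
    (1, 1, 90.6115, 1560.0),
    (1, 2, 96.2115, 1400.0),
    (2, 1, 128.6817, 1410.0),
    (2, 2, 73.1746, 957.91),
    (3, 1, 168.1983, 1340.0),
    (3, 2, 229.8757, 597.07),
]


def overhead_ms(processing_ms, round_trip_ms):
    """Round-trip time not explained by processing (assumes it is included)."""
    return round_trip_ms - processing_ms


def processing_share(processing_ms, round_trip_ms):
    """Fraction of the round trip spent in Azure OpenAI processing."""
    return processing_ms / round_trip_ms


if __name__ == "__main__":
    for turn, example, processing, round_trip in MEASUREMENTS:
        print(
            f"turn {turn}, example {example}: "
            f"overhead {overhead_ms(processing, round_trip):.1f} ms, "
            f"processing share {processing_share(processing, round_trip):.1%}"
        )
```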

When building modern immersive EmbodiedAI experiences, responsiveness, fluidity and speed of interaction are very important. GPU Acceleration will therefore be an important factor in the successful adoption of your solution.

We've already described above how we take advantage of GPU Acceleration for Azure Kinect Body Tracking SDK.

GPU Acceleration can also help with any custom Machine Learning models you may want to build, train, deploy and expose as a Web Service (Web API) for consumption in your EmbodiedAI solution. Please find more information about building and deploying custom Machine Learning models in Azure Cloud and on the Edge in these Medium articles: here and here. PyTorch is a popular open source machine learning framework which allows you to build such models and has built-in support for GPU Acceleration.

When building and deploying custom models in Azure Cloud, you can take advantage of Azure Machine Learning capabilities. For Hybrid and Edge scenarios, you may consider Azure Stack managed appliances, for example Azure Stack Edge Pro with GPU, as described in these Medium articles: here and here. For the latter, you may also need to build your own Docker images for model training, optimization of running processes or real-time inference.

The table below lists a handful of base image options for GPU Accelerated PyTorch applications (MNIST example) using NVIDIA GPUs with CUDA support, which may be useful for training and/or inference.

| Base Docker Image | Description | Dockerfile |
|---|---|---|
| python:3.10 | Python | Link |
| nvidia/cuda:11.6.0-cudnn8-devel-ubuntu20.04 | NVIDIA CUDA + cuDNN | Link |
| pytorch/pytorch:1.13.1-cuda11.6-cudnn8-devel | PyTorch | Link |
| mcr.microsoft.com/azureml/openmpi3.1.2-cuda10.1-cudnn7-ubuntu18.04 | Azure ML (AML) | Link |
| nvcr.io/nvidia/pytorch:23.02-py3 | NVIDIA PyTorch | Link |
| mcr.microsoft.com/vscode/devcontainers/anaconda | VSCode Dev Container | Link |

To build Docker images, please use the `docker build -t your_name .` command. To run Docker containers with GPU acceleration, please use the `docker run --gpus all your_name` command.
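Once a container is running, it can be useful to confirm that PyTorch actually sees the GPU before starting a training or inference job. The following is a minimal sketch under the assumption that the image contains Python and, optionally, PyTorch. The helper name `pick_device` is illustrative and is not taken from the sample's Dockerfiles. It falls back to CPU when PyTorch is missing or no CUDA device is visible, for example when the container was started without `--gpus all`.

```python
def pick_device() -> str:
    """Return "cuda" when PyTorch is installed and sees a CUDA GPU, else "cpu"."""
    try:
        import torch  # imported lazily so the check also runs in images without PyTorch
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print(f"Selected device: {pick_device()}")
```

A model and its input tensors can then be moved to the selected device with PyTorch's `.to(device)` method.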

Reference local setup: A laptop with NVIDIA RTX GPU (great for training), a desktop with 2 NVIDIA GTX GPUs and Azure Stack Edge Pro with NVIDIA Tesla T4 GPU (great for inference).

This code is provided "as is" without warranties to be used at your own risk.